This is part 4 of my series demonstrating my rebuild of my homelab in NixOS.

- Initial creation of the NixOS VM on Proxmox (Part 1).

- Enabling SSH access on NixOS (Part 2).

- Mounting a secondary drive on NixOS (Part 3).

- Decrypting boot early with initrd-ssh (Part 4, this part).

- Installing Docker on NixOS (Part 5).

Remote LUKS Unlocking for NixOS Homelab Systems

The Challenge with Encrypted Homelab Systems:

Running an encrypted NixOS system (or any encrypted Linux) in a homelab environment presents a specific challenge: entering the LUKS passphrase at boot time typically requires either physical access to the machine or access to a management console like Proxmox.

While this might seem fine if you think “my homelab isn’t managed remotely,” it creates limitations. What if you’re away from home when your system needs a reboot? What if you want more flexibility in how you manage your systems?

The Solution: SSH Access During Early Boot:

Fortunately, NixOS provides an elegant solution to this problem. You can configure your system to allow SSH access during the early boot process (initrd) before the root filesystem is mounted. This allows you to remotely enter your LUKS passphrase from anywhere.

“But how can I do that if there’s no way into my network externally?”

Don’t worry - later in this series, we’ll be adding Tailscale and configuring another node as a router, enabling secure remote access to your systems for entering passwords.

By the end of this guide, you’ll have a NixOS system that you can unlock remotely via SSH, eliminating the need for physical access or management console access during boot.

What is initrd?

Initrd is basically a mini operating system that loads before your main system starts. It lives in your computer’s memory and contains the essential tools needed to unlock your encrypted drive. Think of it as a starter engine that helps get your main engine running. By allowing SSH access during this early boot phase, you can remotely type in your password to unlock your encrypted system.

Security Considerations:

Before we start, it’s important to understand the security implications:

- The SSH host keys for early boot must be stored in plain text on an unencrypted partition.

- Theoretically, this means an attacker with physical access could potentially extract these keys and perform a man-in-the-middle attack.

- For truly sensitive systems, you should physically verify hardware integrity or use a TPM.

That said, for this homelab environment where the main goal is convenience while maintaining basic security, this approach is quite useful.

Prerequisites:

Before beginning, we will need a couple of things.

- A working NixOS installation with an encrypted root filesystem.

- SSH already configured for normal system access.

- Your network interface name (can be found using ip a or ifconfig).

- Root access to the system.

- +Note+: If you have been following the steps in this series so far, you will already have all of these.

Step 1: Configure Early Boot Networking:

First, we need to enable networking in the initial ramdisk (initrd). To do this we need the following information:

- The networking interface of the VM.

- The kernel modules required by the network card.

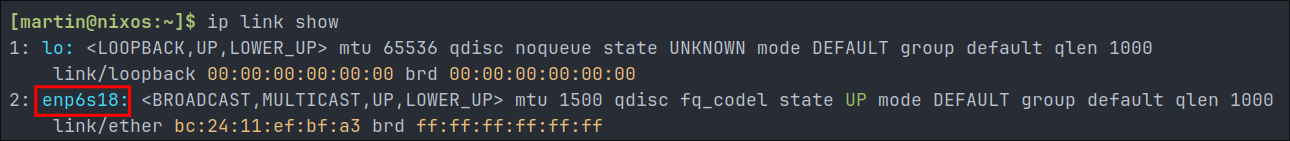

Discovering the network interface we are using:

- Luckily this information is easy to obtain; we can run the command below.

ip link show

- From this we can see the device is enp6s18.

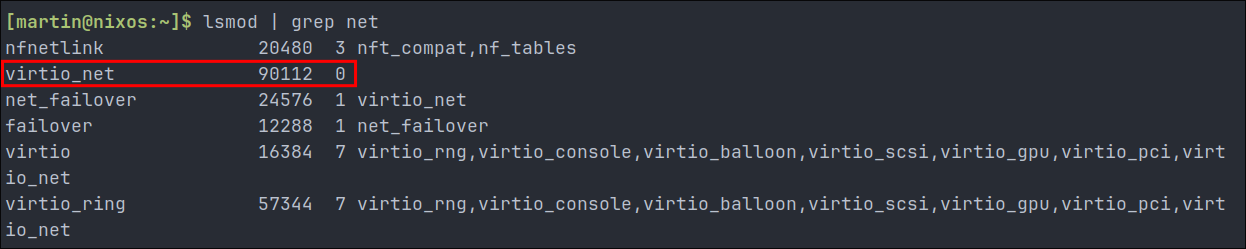
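If you would rather not eyeball the output, something like the following can pull the candidate interface names out for you. This is only a minimal sketch: list_ifaces is a helper name made up for illustration, and it assumes the one-line-per-interface format that ip -o link show produces.

```shell
# Hypothetical helper (not part of NixOS or iproute2).
# Reads `ip -o link show` output on stdin and prints every
# interface name except the loopback device.
list_ifaces() {
  # Fields are separated by ": "; field 2 is the interface name.
  # The sub() call strips an "@parent" suffix that some virtual devices carry.
  awk -F': ' '$2 != "lo" { sub(/@.*/, "", $2); print $2 }'
}

# Usage on the NixOS host:
#   ip -o link show | list_ifaces
```

On a VM with a single network card this should print one name, which is the one to use in the rest of this guide.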

Determining Required Network Modules for NixOS Boot:

To enable networking during early boot, we need to identify which kernel modules are needed for our network interface to function. For Proxmox VMs using VirtIO networking (like ours), we can find these with:

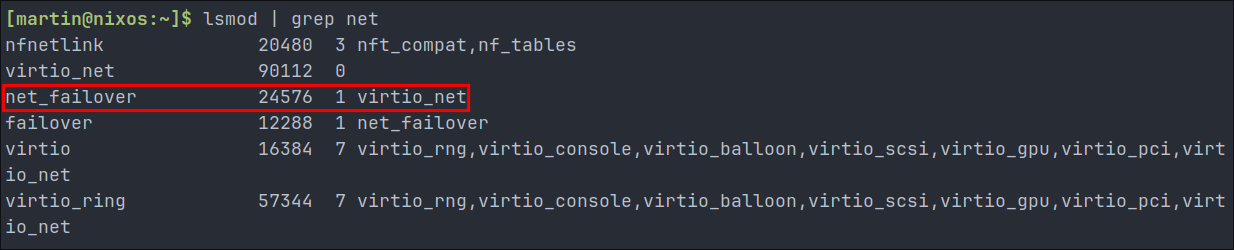

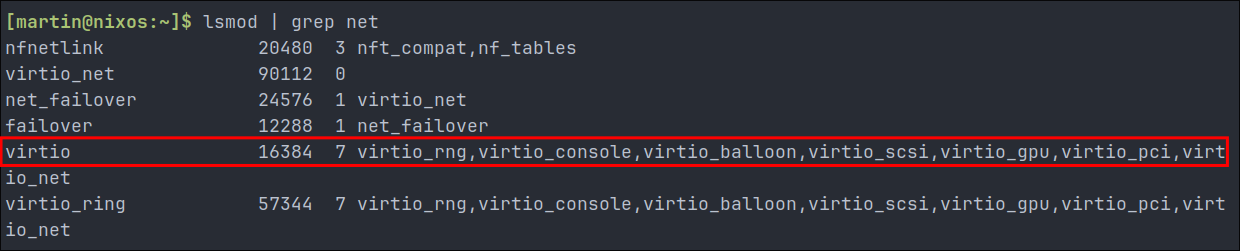

lsmod | grep net

Looking at the output:

[martin@nixos:~]$ lsmod | grep net

nfnetlink 20480 3 nft_compat,nf_tables

virtio_net 90112 0

net_failover 24576 1 virtio_net

failover 12288 1 net_failover

virtio 16384 7 virtio_rng,virtio_console,virtio_balloon,virtio_scsi,virtio_gpu,virtio_pci,virtio_net

virtio_ring 57344 7 virtio_rng,virtio_console,virtio_balloon,virtio_scsi,virtio_gpu,virtio_pci,virtio_net

From this, we can identify the key modules our VirtIO network interface depends on. We’ll need to include these in our NixOS configuration (do not do this yet, we have a little more work to do):

boot.initrd.availableKernelModules = [

"virtio_net" # Primary network driver

"virtio" # Base virtualization support

"virtio_pci" # PCI bus support for virtio

"net_failover" # Network failover capability

"failover" # Base failover functionality

];

These modules ensure your network interface will be properly initialized during the early boot process, allowing SSH access before the encrypted drive is mounted.

Understanding Module Dependencies (Optional):

For those interested in how we determined these modules, let’s break down the lsmod output:

- Module name (first column)

- Size in bytes (second column)

- Used by count (third column) - how many other modules or processes are using this module

- Used by list (fourth column) - which modules are depending on this module

The dependency tree looks like this:

Module Dependency Tree:

virtio_net                Primary network driver
  |
  +--> net_failover       First-level dependency
  |      |
  |      +--> failover    Second-level dependency
  |
  +--> virtio             Virtualization base
  +--> virtio_ring        Low-level support

virtio_pci                Required for PCI bus access (also built on virtio and virtio_ring)

(and other virtio modules)

Reading this dependency chain:

- Primary Network Module:

  - virtio_net is your actual network driver.

  - The “0” in the third column means no other loaded module depends on it.

  - It does appear in other modules’ “Used by” lists, which means it depends on those modules.

- First-Level Dependencies:

  - virtio_net depends on net_failover (indicated by the “1” and “virtio_net” in the net_failover row).

  - virtio is used by 7 modules, including virtio_net.

- Second-Level Dependencies:

  - net_failover depends on failover (the failover row lists net_failover).

  - virtio_pci is needed by the virtualization stack.

  - virtio_ring provides low-level support for all virtio modules.
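The same reading of the “Used by” column can be automated. The sketch below is an illustration only: deps_of is a hypothetical helper, and it assumes the four-column lsmod layout described above.

```shell
# Hypothetical helper: reads lsmod output on stdin and prints the modules
# that the module named in $1 depends on, i.e. every row whose
# "Used by" list (fourth column) contains that name.
deps_of() {
  awk -v m="$1" '$1 != "Module" {
    n = split($4, users, ",")
    for (i = 1; i <= n; i++) if (users[i] == m) print $1
  }'
}

# Usage on the NixOS host:
#   lsmod | deps_of virtio_net
```

Fed the lsmod output shown earlier, it lists net_failover, virtio and virtio_ring for virtio_net, and failover for net_failover.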

Adding The Modules To Our hardware-configuration.nix:

As we now have the name of our interface as well as the list of modules, we can modify our hardware-configuration.nix to include this information, so let’s do it.

- +Before we make any changes, back up+ hardware-configuration.nix:

sudo cp /etc/nixos/hardware-configuration.nix /etc/nixos/hardware-configuration.nix.bak

- This is just in case we need to revert any changes; having the copy makes that much easier.

- Open hardware-configuration.nix:

sudo nano /etc/nixos/hardware-configuration.nix

- +Note+: Just FYI, I know we are using nano, as a vim person this pains me but we have not gotten to the installing packages part, but once we do, it will be vim motion city.

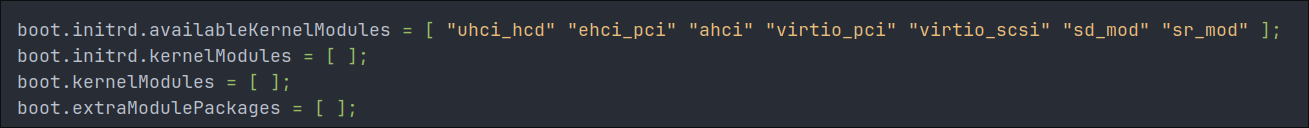

When you open the file you will see that the boot.initrd.availableKernelModules list already has some entries, as in the image below. It already includes virtio_pci, so we can remove that from our list.

Don’t worry about the existing entries; we are just going to add our additional kernel modules to this list. We are also going to split it across multiple lines so it’s a lot easier to read.

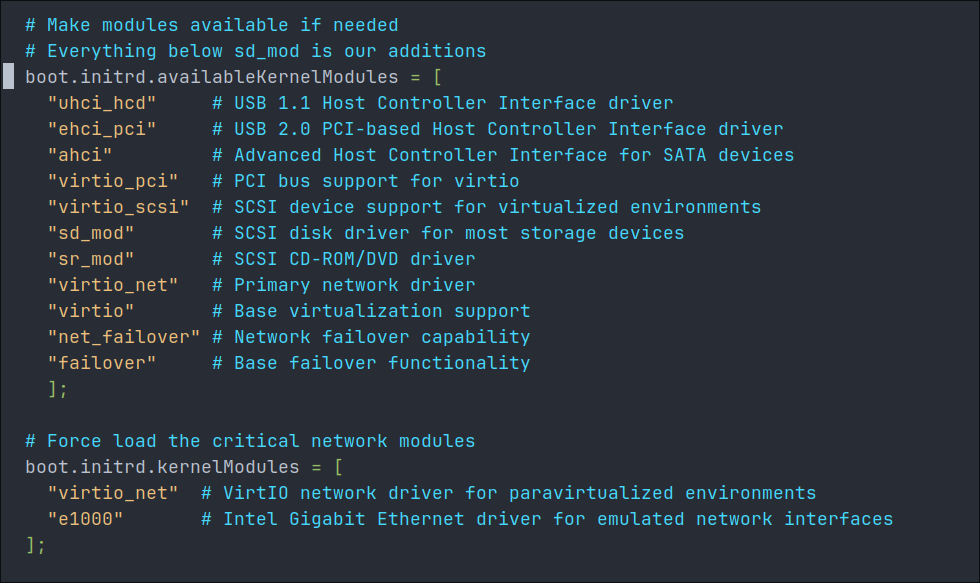

# Make modules available if needed

# Everything below sr_mod is our additions

boot.initrd.availableKernelModules = [

"uhci_hcd" # USB 1.1 Host Controller Interface driver

"ehci_pci" # USB 2.0 PCI-based Host Controller Interface driver

"ahci" # Advanced Host Controller Interface for SATA devices

"virtio_pci" # PCI bus support for virtio

"virtio_scsi" # SCSI device support for virtualized environments

"sd_mod" # SCSI disk driver for most storage devices

"sr_mod" # SCSI CD-ROM/DVD driver

"virtio_net" # Primary network driver

"virtio" # Base virtualization support

"net_failover" # Network failover capability

"failover" # Base failover functionality

];

Once you have made these changes save the file, but do not rebuild as we have more changes to make.

Force Loading Kernel Modules in hardware-configuration.nix:

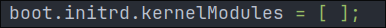

We are now going to force load some kernel modules. Again open up hardware-configuration.nix & you will see that the boot.initrd.kernelModules list is empty.

We are going to add the two entries below, again splitting it across multiple lines to make it more readable.

# Force load the critical network modules

boot.initrd.kernelModules = [

"virtio_net" # VirtIO network driver for paravirtualized environments

"e1000" # Intel Gigabit Ethernet driver for emulated network interfaces

];

Why use both availableKernelModules & kernelModules?

- availableKernelModules: This makes the modules available to be loaded if needed. The system will only load them when it detects matching hardware.

- kernelModules: This forcibly loads the listed modules during the boot process, regardless of whether the hardware is detected.

By including both “virtio_net” and “e1000” in kernelModules, we cover the two network card models you are most likely to meet in a Proxmox VM:

- If your VM uses VirtIO networking (most common in Proxmox for performance), the virtio_net driver will work.

- If your VM uses emulated Intel e1000 networking, the e1000 driver will work.

This makes our configuration more robust and portable. If you share this configuration or apply it to another VM that uses the other of these two device types, it should still work without modification.

- +Note+: This approach is especially important for remote LUKS decryption, where network access is critical and we don’t want to be locked out of our encrypted systems.

You should now have the following entries in your hardware-configuration.nix:

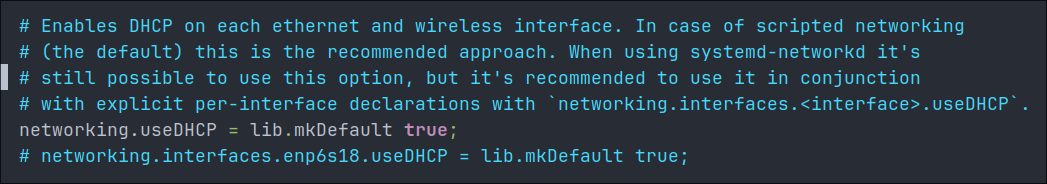

Configuring DHCP for Early Boot Networking:

Now we need to configure how our network interface obtains an IP address during early boot. For remote LUKS decryption to work reliably, we want to be very specific about our network configuration:

- We’ll disable global DHCP (which would affect all interfaces)

- Then enable it specifically for our known working interface, which you established earlier; in my case it’s enp6s18.

This approach has several advantages:

- It prevents potential delays from NixOS trying to configure interfaces that don’t exist.

- It ensures we know exactly which interface is being used for network access.

- It provides more predictable behavior during the critical early boot phase.

- It follows the principle of least privilege by only enabling what we need.

The settings to alter/replace will most likely be at the bottom of your hardware-configuration.nix and look similar to the below.

Here are the settings we need to add/replace.

# Networking configuration

networking = {

# Disable global DHCP to prevent automatic configuration on all interfaces

useDHCP = lib.mkDefault false;

# Enable DHCP only for our specific interface that we need for early boot

interfaces.enp6s18.useDHCP = lib.mkDefault true;

};

- First we wrap everything in the networking block.

- useDHCP = false: This disables automatic DHCP for all interfaces, giving us precise control over which interfaces use DHCP.

- interfaces.enp6s18.useDHCP = true: This explicitly enables DHCP for only our known working interface.

Step 2: Generate SSH Host Key:

- First we need to generate a dedicated SSH host key for the initrd environment on the nixos host

- +IMPORTANT+: Remember this will be stored in plain text, so +don’t reuse your regular host keys!+

# Generate a new ed25519 key

sudo ssh-keygen -t ed25519 -f /etc/ssh/initrd_ssh_host_ed25519_key

- You do not need to give this a password.


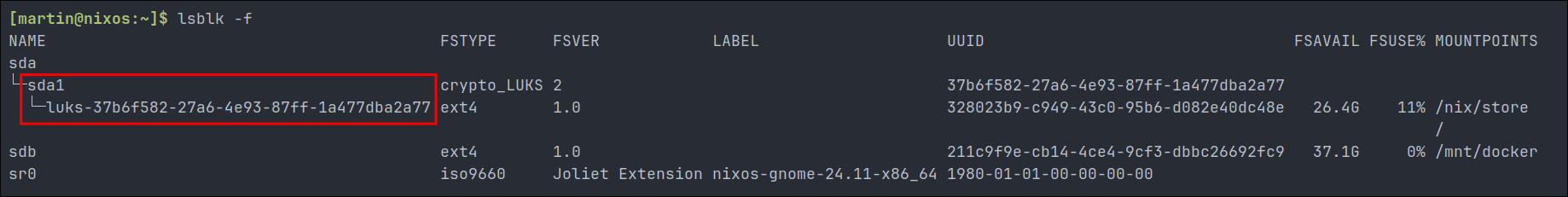
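If you want to script this step rather than answer the prompts, a non-interactive variant might look like the sketch below. make_initrd_host_key is a hypothetical wrapper name; the flags themselves are standard ssh-keygen options (-N "" sets an empty passphrase, -l prints the fingerprint). It assumes the target file does not exist yet.

```shell
# Hypothetical wrapper around ssh-keygen.
# $1 = path for the new private key
#      (this guide uses /etc/ssh/initrd_ssh_host_ed25519_key)
make_initrd_host_key() {
  # Create an ed25519 key with no passphrase, then print its fingerprint
  # so it can be compared against what ssh shows on the first connection.
  ssh-keygen -q -t ed25519 -N "" -C "initrd" -f "$1" &&
    ssh-keygen -l -f "$1.pub"
}

# Usage (as root on the NixOS host):
#   make_initrd_host_key /etc/ssh/initrd_ssh_host_ed25519_key
```

Noting the fingerprint now gives you something to check against later, which helps with the man-in-the-middle concern raised in the security considerations.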

Step 3: Find the UUID of your LUKS encrypted partition:

For this to work we need the UUID of the LUKS encrypted partition. Use the command below:

lsblk -f

- +Note+: Luckily it’s prefixed with luks, so it’s easy enough to spot. From the image we can see that on my host the UUID is 37b6f582-27a6-4e93-87ff-1a477dba2a77.
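To avoid copying the UUID by hand, it can also be filtered out of lsblk’s key="value" output. This is a rough sketch: luks_uuids is a made-up helper name, and it relies on LUKS containers reporting the filesystem type crypto_LUKS.

```shell
# Hypothetical helper: reads `lsblk -P -o FSTYPE,UUID` output on stdin
# and prints the UUID of every LUKS container it finds.
luks_uuids() {
  sed -n 's/^FSTYPE="crypto_LUKS" UUID="\([^"]*\)".*$/\1/p'
}

# Usage on the NixOS host:
#   lsblk -P -o FSTYPE,UUID | luks_uuids
```

If more than one UUID is printed, you have several encrypted partitions and will need to pick the one that holds the root filesystem.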

Step 4: Set Up SSH Server in initrd:

Now we have all the information we need, so we can do the bulk of our configuration. First we’ll configure an SSH server to run during early boot and set up a systemd service to handle LUKS decryption. These changes go in our /etc/nixos/hardware-configuration.nix file.

Below is what you will need to paste in:

- +Remember+:

  - Replace the authorizedKeys entry with your own public key.

  - Use your LUKS UUID for the device path.

boot.initrd = {

# Enable systemd in the initial ramdisk environment

systemd = {

enable = true;

# Specify which programs need to be available during early boot

initrdBin = with pkgs; [

cryptsetup # Tool needed for unlocking LUKS encrypted drives

];

# Configure networking using systemd's network manager

network = {

networks = {

"enp6s18" = { # Replace with your network interface name

matchConfig = {

Name = "enp6s18"; # Matches the network interface by name

};

networkConfig = {

DHCP = "yes"; # Enable DHCP to automatically get an IP address

};

};

};

};

# Define the service that will handle LUKS unlocking

services = {

unlock-luks = {

description = "Unlock LUKS encrypted root device";

# Make sure this service runs during boot

wantedBy = [ "initrd.target" ];

# Wait for network to be ready before trying to unlock

after = [ "network-online.target" ];

# Must unlock before trying to mount the root filesystem

before = [ "sysroot.mount" ];

# Ensure necessary tools are available

path = [ "/bin" ];

# Configure how the service behaves

serviceConfig = {

Type = "oneshot"; # Service runs once and exits

RemainAfterExit = true; # Consider service active even after it exits

SuccessExitStatus = [ 0 1 ]; # Both 0 and 1 are considered success

};

# The actual commands to unlock the drive

script = ''

echo "Waiting for LUKS unlock..."

# Try to unlock the encrypted drive

# The || true ensures the script doesn't fail if first attempt fails

cryptsetup open /dev/disk/by-uuid/YOUR-UUID-HERE root --type luks || true

'';

};

};

};

# Configure SSH access during early boot

network = {

enable = true;

ssh = {

enable = true;

port = 2222; # Use a non-standard port for security

# Only allow running the unlock service when connecting via SSH

authorizedKeys = [

''command="systemctl start unlock-luks.service" YOUR-SSH-KEY-HERE''

];

# Location of the SSH host key

hostKeys = [ "/etc/ssh/initrd_ssh_host_ed25519_key" ];

};

};

};

Understanding Each Part in Detail:

- Basic Structure (boot.initrd):

  - This is where we configure what happens during the early boot process.

  - The “initrd” is like a mini operating system that runs before your main system starts.

  - It’s responsible for getting your encrypted drive unlocked and ready.

- Systemd Configuration (systemd = { ... }):

  - Systemd is the system manager that coordinates various services.

  - We’re telling it to:

    - Be active during early boot (enable = true)

    - Have the necessary tools available (initrdBin)

    - Set up networking

    - Create a service for unlocking the drive

- Network Setup (network = { ... }):

  - Uses systemd’s built-in network manager.

  - Configures your specific network card (replace “enp6s18” with your interface name).

  - Enables DHCP to automatically get an IP address.

  - This ensures you can connect to your system over the network before it’s fully booted.

- The Unlock Service (services.unlock-luks):

  - This is the service that actually unlocks your encrypted drive.

  - It has several important parts:

    - wantedBy = [ "initrd.target" ]: makes sure it runs during boot

    - after/before: controls when exactly it runs

    - serviceConfig: how the service should behave

    - script: the actual commands to unlock the drive

- SSH Configuration (network.ssh):

  - Sets up secure remote access during early boot.

  - Uses port 2222 instead of the default 22, which mainly avoids drive-by scans and keeps it separate from the main system’s SSH service.

  - The command="..." prefix restricts what SSH users can do.

  - Only allows running the unlock service, nothing else.

- Security Features:

  - Non-standard SSH port (2222)

  - Restricted commands through SSH

  - Dedicated SSH host key for the early boot environment

  - Service dependencies ensure proper ordering

- Error Handling:

  - SuccessExitStatus = [ 0 1 ]: treats exit codes 0 and 1 as success

  - || true at the end of the unlock command prevents fatal errors

  - RemainAfterExit = true keeps track of the service state

This configuration creates a secure way to remotely unlock your encrypted drive while ensuring that:

- Only authorized users can connect (authorizedKeys).

- The network is properly configured first, using systemd’s built-in network manager.

- The unlock process happens at exactly the right time during boot (before/after).

- Failed attempts won’t break the boot process (SuccessExitStatus = [ 0 1 ]).

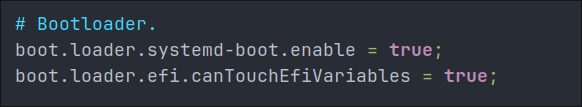
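The snippet in this step ships with two placeholders (YOUR-UUID-HERE and YOUR-SSH-KEY-HERE), and rebuilding with either still in place would leave you unable to unlock remotely. A small check like the one below can catch that before any rebuild. It is just a sketch, and check_placeholders is a hypothetical helper name.

```shell
# Hypothetical pre-flight check.
# $1 = path to the Nix file to inspect
# Returns non-zero (and shows the offending lines) if a placeholder
# from this guide is still present.
check_placeholders() {
  if grep -nE 'YOUR-UUID-HERE|YOUR-SSH-KEY-HERE' "$1"; then
    echo "Placeholders still present in $1" >&2
    return 1
  fi
  echo "No placeholders left in $1"
}

# Usage on the NixOS host:
#   check_placeholders /etc/nixos/hardware-configuration.nix
```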

Step 5: Configure Your Boot Loader:

As discussed, the bootloader is the first program that runs when the computer starts, and it’s responsible for loading the Linux kernel and initrd (initial RAM disk). We need to add some additional configuration, mainly for security reasons.

Currently your bootloader configuration will look like this image

We are going to replace the above with the below. It is mostly the same; however, we are limiting the number of stored configurations to 10 and explicitly disabling the editor so users cannot drop into a root shell.

#Bootloader configuration

boot.loader = {

systemd-boot = {

enable = true;

configurationLimit = 10;

editor = false; # Disable editing boot entries for security

};

efi.canTouchEfiVariables = true; # Usually true for most systems

};

Understanding this configuration:

- systemd-boot.enable = true activates this lightweight, modern bootloader.

- configurationLimit = 10 keeps your boot menu clean by limiting stored configurations.

- editor = false prevents attackers from bypassing security via boot parameters.

- efi.canTouchEfiVariables = true allows NixOS to update boot entries (set to false for systems with problematic UEFI).

Step 6: Rebuild Your System:

After making these changes, rebuild your NixOS configuration:

sudo nixos-rebuild switch

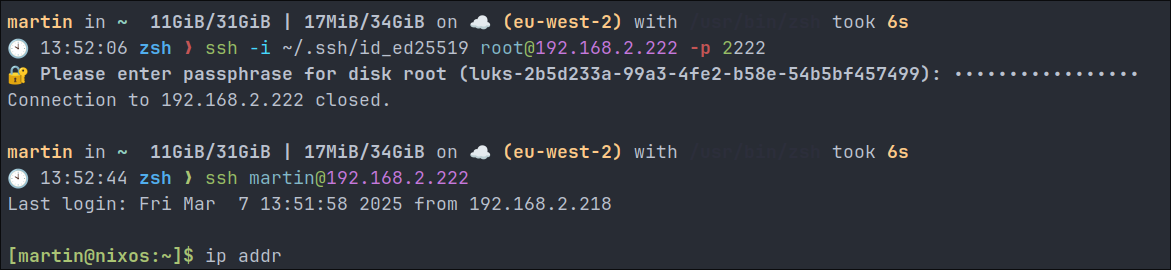

Step 7: Test Remote Decryption:

Now you can test your setup:

- Reboot your system:

sudo reboot

- From another machine, SSH into your system during early boot:

ssh -p 2222 root@your-system-ip

- +Note+: I would allow 30 seconds from the reboot signal being sent before trying to SSH in, as you have to wait for the initial boot and NixOS version screen to pass.

- You should be prompted to enter the LUKS passphrase.

- After entering the correct passphrase, the boot process will continue.

If everything is set up correctly, the server will finish booting after you provide the passphrase and you can access it via ssh.
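Rather than guessing when the 30 seconds are up, you could poll the early-boot SSH port from your other machine and connect as soon as it answers. The sketch below is only an illustration: retry_until is a hypothetical helper, and the usage line assumes a netcat build that supports the -z (scan only) flag.

```shell
# Hypothetical helper: run a command repeatedly until it succeeds.
# $1 = maximum number of attempts
# $2 = seconds to wait between attempts
# remaining arguments = the command to run
retry_until() {
  tries=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$tries" ]; do
    "$@" && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage from another machine (replace your-system-ip):
#   retry_until 30 2 nc -z your-system-ip 2222 && ssh -p 2222 root@your-system-ip
```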

Troubleshooting

If you encounter issues check the below troubleshooting steps.

Can’t connect via SSH during early boot

- Verify your network settings are correct

- Check if the SSH port is open (no firewall blocking it)

nmap -p 2222 [ipOfHost]

- Ensure your network hardware is supported in the initrd

System doesn’t continue booting after entering the passphrase

- Check for errors in your LUKS configuration

- Verify the disk path is correct

- Try running the cryptsetup command manually during the SSH session

SSH host key warnings

- This is expected when connecting, as the initrd uses a different host key from the main system

- You can add the initrd host key to your known_hosts file or use:

ssh -p 2222 -o StrictHostKeyChecking=no root@your-system-ip
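If you would rather not switch host key checking off, one option is a dedicated host alias, so the initrd key is accepted once and then verified on every later connection. The sketch below appends such an entry to an SSH client config file. The function name, the alias homelab-unlock and the file name known_hosts_initrd are all made up for illustration; Host, HostName, Port, User and UserKnownHostsFile are standard OpenSSH client options.

```shell
# Hypothetical helper: append a host alias for the early-boot SSH server.
# $1 = IP address or hostname of the NixOS system
# $2 = SSH client config file to append to (normally ~/.ssh/config)
write_initrd_ssh_alias() {
  cat >> "$2" <<EOF
Host homelab-unlock
    HostName $1
    Port 2222
    User root
    UserKnownHostsFile ~/.ssh/known_hosts_initrd
EOF
}

# Usage from your client machine (replace your-system-ip):
#   write_initrd_ssh_alias your-system-ip ~/.ssh/config
#   ssh homelab-unlock
```

The first connection will still ask you to confirm the fingerprint; after that, a changed key produces a warning instead of being silently accepted.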

Systemd Service Issues

- Check service status:

systemctl status unlock-luks

- View service logs:

journalctl -u unlock-luks

- Verify service dependencies:

systemctl list-dependencies unlock-luks

Last resort, revert to the previous hardware configuration:

- Remember we made a copy of hardware-configuration.nix? Well, you can just revert back to using it.

sudo rm /etc/nixos/hardware-configuration.nix

sudo mv /etc/nixos/hardware-configuration.nix.bak /etc/nixos/hardware-configuration.nix

sudo nixos-rebuild switch

sudo reboot now

Conclusion/Next Time:

You now have a NixOS system that can be remotely unlocked after rebooting, eliminating the need for physical access or console access through Proxmox. This setup is particularly useful for homelab environments where systems might need occasional reboots but aren’t easily physically accessible.

Remember that while this setup provides convenience, it does have security implications. The SSH host keys for the initrd are stored unencrypted, which could be a vulnerability if an attacker gains physical access to your system.

In the next post we will move onto installing packages and setting up docker.

Sign-off:

As always hack the planet homelab!