This is mainly here for my own reference. I will write up Nix instructions when I finally migrate my main system to Nix, but at the moment I am on Arch and wanted to document the process for myself.

I am using an AMD GPU, so the steps may differ for you.

Install AMD GPU backend packages:

sudo pacman -S rocminfo rocm-opencl-sdk rocm-hip-sdk rocm-ml-sdk

Install Ollama with AMD GPU support:

yay -S ollama-rocm

Start Ollama:

ollama serve

Run Open WebUI via Docker Compose. Save the following as docker-compose.yml:

services:
  open-webui:
    build:
      context: .
      dockerfile: Dockerfile
    image: ghcr.io/open-webui/open-webui:${WEBUI_DOCKER_TAG-main}
    container_name: open-webui
    volumes:
      - ./open-webui:/app/backend/data
    network_mode: host
    environment:
      - 'OLLAMA_BASE_URL=http://127.0.0.1:11434'
    restart: unless-stopped

volumes:
  open-webui: {}

docker compose up -d

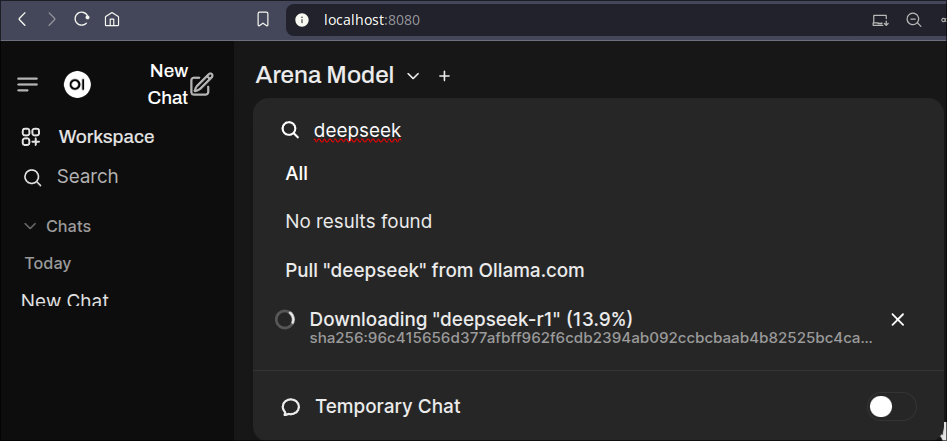

Access Open WebUI:

http://localhost:8080

Pull A Model Down:
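
The step above does not name a model, so here is a minimal sketch of what pulling one might look like. The model name llama3.2 is only an assumed example from the Ollama model library, not one chosen in this post; substitute whichever model fits your VRAM.

```shell
# Assumption: llama3.2 is just an example model name; pick any model from the library.
MODEL="llama3.2"

if command -v ollama >/dev/null 2>&1; then
    # Download the model weights, then send a one-off prompt to confirm it loads.
    ollama pull "$MODEL"
    ollama run "$MODEL" "Reply with a one-sentence greeting."
else
    echo "ollama not found in PATH; install it first" >&2
fi
```

Once the pull finishes, the model should also show up in the model picker in Open WebUI.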

System Requirements

- Minimum 8GB RAM (16GB+ recommended for larger models)

- GPU with at least 6GB VRAM for running medium-sized models

- Storage space depending on models (each model can be 3-8GB+)
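
To compare your machine against the numbers above, something like the following sketch may help. The helper name total_ram_gib is made up for illustration, and the disk check assumes models are stored under your home directory, which I believe is the default for a manually started ollama serve.

```shell
# Round MemTotal (reported in kB) to whole GiB. The optional argument is a
# meminfo-style file; it defaults to /proc/meminfo (Linux only).
total_ram_gib() {
    awk '/^MemTotal:/ { printf "%.0f\n", $2 / 1024 / 1024 }' "${1:-/proc/meminfo}"
}

echo "System RAM: $(total_ram_gib) GiB"

# VRAM total and usage per GPU, if rocm-smi is installed.
if command -v rocm-smi >/dev/null 2>&1; then
    rocm-smi --showmeminfo vram
fi

# Free space on the filesystem holding your home directory
# (assumption: this is where the models end up).
df -h "$HOME"
```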

Common Model Commands

# List all installed models

ollama list

# Remove a model

ollama rm model-name

# Get model information

ollama show model-name

# Run a model in CLI

ollama run model-name

Troubleshooting

- If GPU is not detected, ensure ROCm drivers are properly installed and configured

- Check logs (if Ollama runs as a systemd service rather than a manual ollama serve) with:

journalctl -u ollama

- Verify Ollama service status with:

systemctl status ollama

- Common ports used: 11434 (Ollama API), 8080 (Open WebUI)
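
A quick way to see whether anything is listening on those two ports is sketched below. The port_open helper is hypothetical and relies on bash's /dev/tcp pseudo-device, so it assumes you are running bash rather than plain sh.

```shell
# Succeeds if something accepts TCP connections on host $1, port $2.
# Uses bash's /dev/tcp pseudo-device, so this needs bash.
port_open() {
    (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

for port in 11434 8080; do
    if port_open 127.0.0.1 "$port"; then
        echo "port $port: listening"
    else
        echo "port $port: nothing listening"
    fi
done

# Ask the Ollama API for its version; a failure here means the server is not reachable.
if command -v curl >/dev/null 2>&1; then
    curl -fsS http://127.0.0.1:11434/api/version || echo "Ollama API not reachable" >&2
fi

# List loaded models; the PROCESSOR column shows whether they run on GPU or CPU.
if command -v ollama >/dev/null 2>&1; then
    ollama ps
fi
```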

Additional Resources

- Official Ollama documentation: https://github.com/ollama/ollama/tree/main/docs

- Model library: https://ollama.com/search

- GitHub repository: https://github.com/ollama/ollama

Introduction

This guide shows how to run Large Language Models (LLMs) locally using Ollama with AMD GPU acceleration. While many guides focus on NVIDIA GPUs, this one covers AMD GPU setup using ROCm on Arch Linux. Running LLMs locally keeps your prompts on your own machine, avoids network round trips to a hosted API, and has no per-request API costs.

Performance Optimization

AMD GPU-Specific Settings

- Ensure ROCm is properly detecting your GPU:

rocminfo

- Monitor GPU usage:

rocm-smi

- Check GPU temperature:

sensors
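
rocminfo prints a lot of output, so a small filter can make the detection check easier to read. This is only a sketch: list_gfx_targets is a made-up helper that pulls the gfx architecture names out of whatever text it is given, on the assumption that your GPU is reported with a name like gfx1030.

```shell
# Extract the unique gfx target names (e.g. gfx1030) from rocminfo output on stdin.
list_gfx_targets() {
    grep -oE 'gfx[0-9a-f]+' | sort -u
}

if command -v rocminfo >/dev/null 2>&1; then
    rocminfo | list_gfx_targets
fi

# To watch utilisation while a model is generating, refresh rocm-smi every second:
# watch -n 1 rocm-smi
```

If nothing is printed, ROCm is probably not seeing the GPU at all, which points back to the driver and package installation step.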

Model Optimization

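- Use quantized models (e.g., q4_0, q4_1) to cut VRAM use and speed up inference, at some cost in output quality

- Adjust context length based on your VRAM; a longer context needs more memory for the KV cache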

- Consider model size vs. performance tradeoffs
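
As a rough way to reason about the size tradeoff, the weights of a model take about (parameters x bits per weight / 8) bytes. The sketch below does that arithmetic; estimate_weights_gib is a hypothetical helper, and the result covers the weights only, so leave headroom for the KV cache and runtime overhead. The 4.5 bits per weight used for q4_0 is an approximation (4-bit values plus a per-block scale).

```shell
# Approximate size of the model weights in GiB.
#   $1 = parameter count in billions (e.g. 7)
#   $2 = average bits per weight (e.g. 4.5 for q4_0, 16 for fp16)
estimate_weights_gib() {
    awk -v p="$1" -v b="$2" \
        'BEGIN { printf "%.1f\n", p * 1e9 * b / 8 / 1073741824 }'
}

echo "7B at ~4.5 bits/weight: $(estimate_weights_gib 7 4.5) GiB"
echo "7B at 16 bits/weight:   $(estimate_weights_gib 7 16) GiB"
```

By this estimate a 7B model at around 4.5 bits per weight needs under 4 GiB for its weights, while the same model at 16 bits needs about 13 GiB, which is why quantized models are the practical choice on a 6-8GB card.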